Latest Posts

Using Structured Output with LLMs

This post covers three approaches for getting structured output out of LLMs: use native structured output APIs when available, fall back to function calling for broader provider support, or combine careful prompting with validation as a universal solution.

Building a RAG system from scratch

Learn to build a complete Retrieval Augmented Generation (RAG) system from scratch using Python. This hands-on guide covers everything from document chunking and embeddings to similarity search and LLM integration, with interactive 3D visualizations showing how semantic search actually works under the hood.

Optimizing Inference for Image Generation Models: Memory Tricks and Quantization

Let's explore how I was able to run an image generation model, FLUX.1 Dev, using only 20% of the VRAM it normally requires. Through quantization and memory optimization techniques, I'll show you practical strategies that make high-quality image generation accessible on consumer GPUs, complete with performance benchmarks and real-world examples.

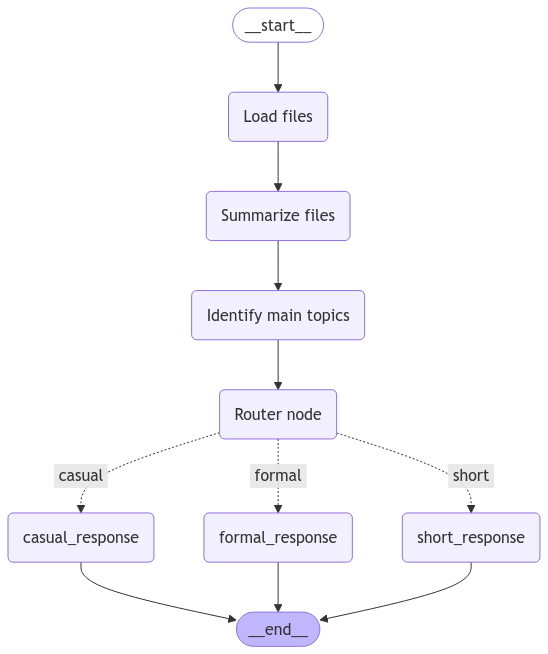

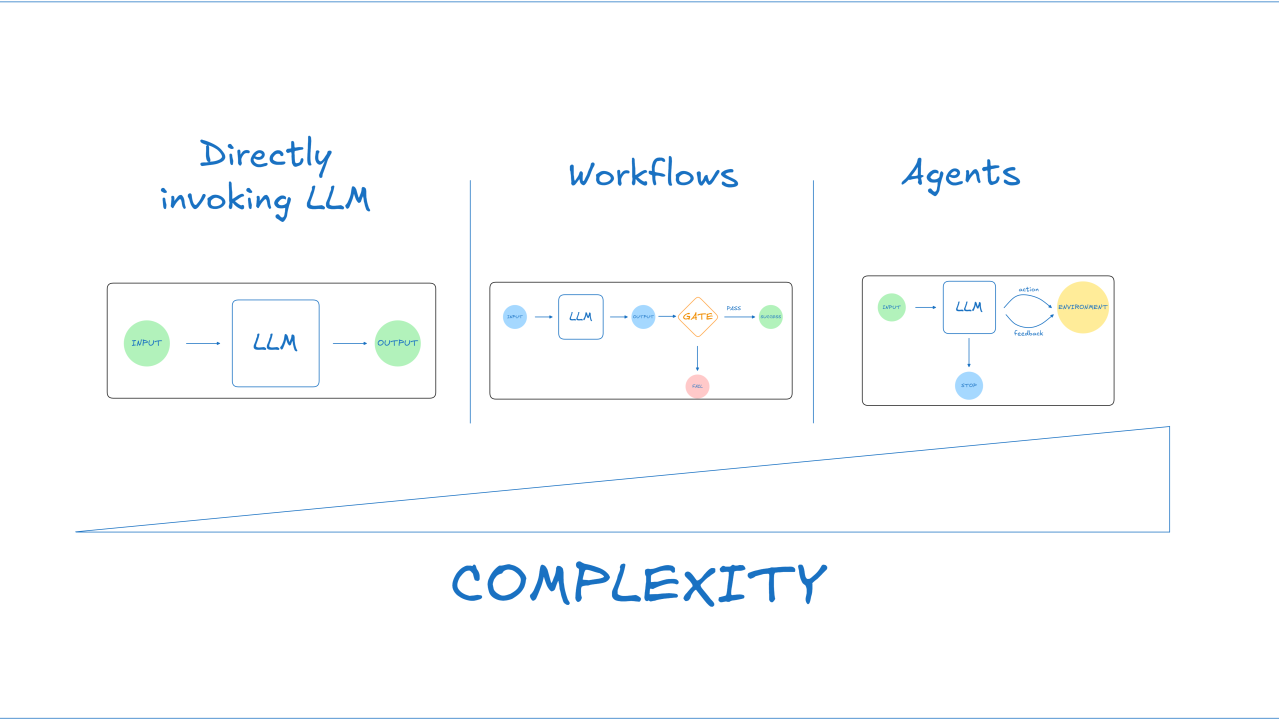

Agentic and workflow example implementations

Practical examples of agentic and workflow-based AI patterns, with code and design decisions inspired by Anthropic’s research. Learn how to implement direct LLM calls, prompt chaining, and routing workflows, all while keeping your systems simple and maintainable.

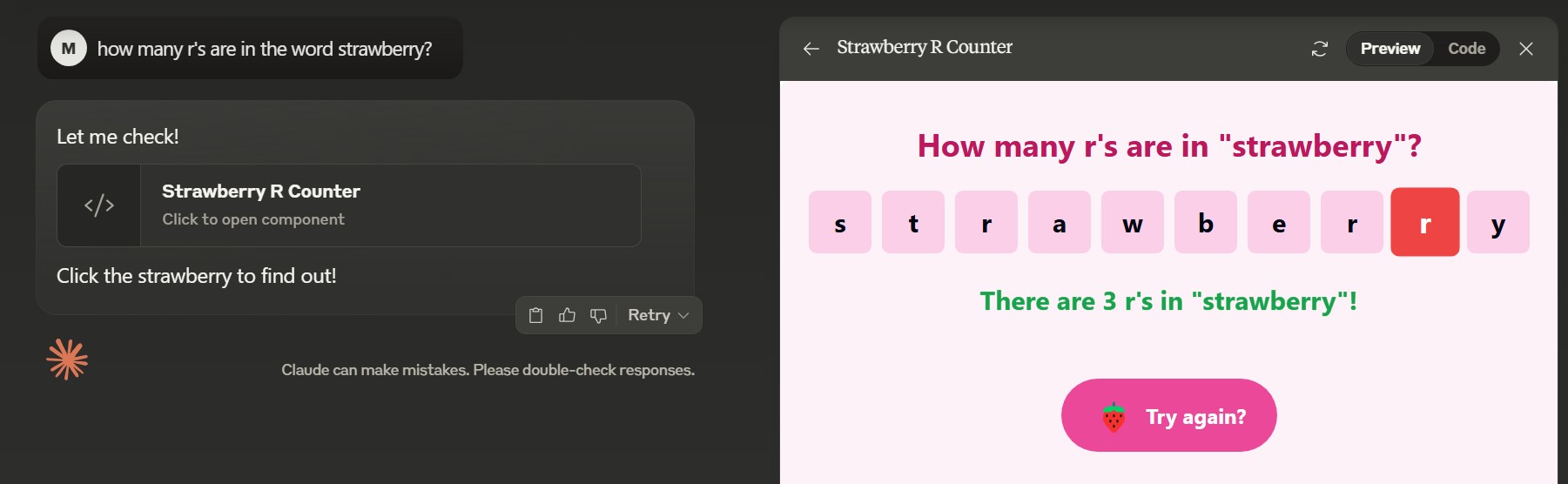

Vibe check for Claude 3.7 Sonnet

Anthropic’s Claude 3.7 Sonnet introduces extended thinking, a visible thought process, and impressive benchmark results. Here are my first impressions, and why this model feels like a big step forward for practical AI.

What is the deal with Agentic AI systems?

Are agentic AI systems always the answer? In this post, I explore why simplicity often beats complexity, when agent-based architectures make sense, and why you should reach for agents only when truly needed.